The VEMI Lab researches human-computer interaction (HCI), studying topics including, but not limited to, autonomous vehicles, virtual reality, and multimodal research. At the VEMI Lab, my main responsibility was to create applications to support our research. I started at VEMI my freshman year, designing 3D models in Blender to aid the development of our VR environments. I quickly transitioned into iOS development, later becoming the lab's lead Swift developer. I led the development of projects for Ultrahaptics and VAMs. Additionally, I gave lab tours, led a lab meeting, and trained new developers.

Autonomous Vehicle Simulator

The autonomous vehicle simulator, designed by the VEMI Lab, was the first six-person fully autonomous vehicle (FAV) simulator in Northeast America. This platform is used to research how humans will interact with FAVs. I aided in the design and development of the simulator. Applications were developed in Unity to simulate a city environment, where I used telemetry data to actuate and control the simulator. I then designed an iOS application and WebSocket server that the simulator communicates with, allowing real-time feedback and control of the simulator from an iOS device.

Vibrotactile Audio Maps Library

The Vibrotactile Audio Maps (VAM) library is based on previous research into the use of haptics to convey information effectively. The library allows the developer to create VAM objects, which are visual objects paired with an audio prompt and haptics. This audio and vibratory feedback provides users who are visually impaired with additional information. The software has been used in multiple studies conducted at VEMI, with one published paper so far. This technology will support future grants studying areas such as spatial navigation and learning modalities.

The VAM Library consists of two main applications: the VAM iOS Library and the VTGraphics desktop application. The VAM iOS Library is the Swift implementation of VAMs. It provides developers with an easy-to-use library for implementing VAMs in their iOS applications and gives them control to create custom vibrations and audio prompts based on user interaction.

The VTGraphics application provides researchers with an easy-to-use Windows desktop application, written in C++, for creating VAMs. The researcher can customize the vibrations, audio prompts, color, size, and position of each object. These drawings can be exported to a JSON file, which can then be imported into supported iOS applications.

Published Papers

Related Papers

Comparing Map Learning between Touchscreen-Based Visual and Haptic Displays: A Behavioral Evaluation with Blind and Sighted Users

Ultrahaptics

Ultrahaptics is a mid-air haptic device that uses ultrasonic transducers to create sensations in the air. Paired with a hand-tracking camera, it provides a unique user experience. Our main interest in the device was to determine its limitations as a user interface for systems such as touchless kiosks or autonomous vehicles. Two studies were conducted: one investigating a person's ability to perceive the haptic sensation, and the other determining the ability to track items spatially. I worked with the researchers to develop these studies using Unity.

Published Papers

Weasley Clock

The Weasley Clock, inspired by a novel, was a project that used Bluetooth Low Energy (BLE) technology to locate people within a building. I designed an iOS application using Firebase that allowed users to create an account associated with their Bluetooth device. The application pulled data from our local PHP server, which determined the user's location within the building.

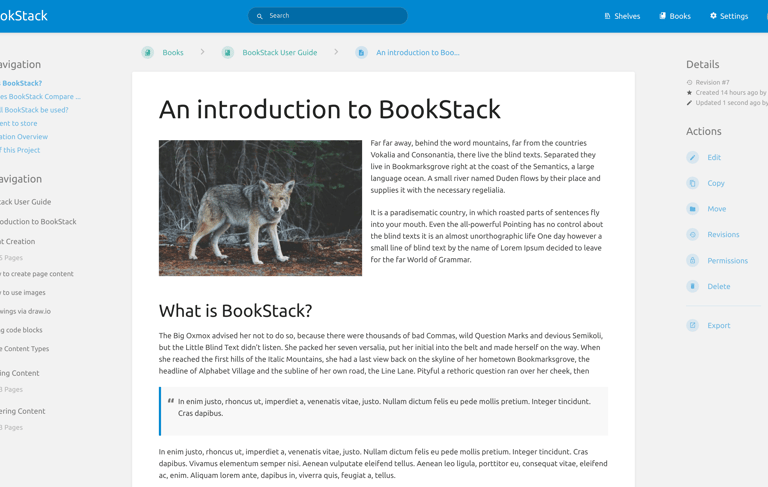

Bookstack

I played a key role in implementing project management tools and protocols for the lab, establishing a coding standard, and setting up Bookstack to streamline documentation management. I led meetings to introduce the Bookstack server to the staff, gathering feedback on its functionality and identifying improvements to better serve the lab's needs.

Mozilla Hubs and Blender

For my first three months at VEMI, I did 3D modeling in Blender, where I built a model of the University of Maine campus and developed an immersive environment for Mozilla Hubs, which was later used by the lab. I also worked on a project to explore the possibilities of web-based VR, using the A-Frame JavaScript library to create an interactive VR space. Additionally, I created over 50 fully textured 3D models for a game environment, showcasing my expertise in modeling and texturing within Blender.

MSF 2024

Every year, VEMI attends the Maine Science Festival (MSF). In 2024, we decided to create an educational game with a scavenger hunt for the kids to enjoy. With no budget, I worked with our graphic designers to build a React app, hosted on GitHub Pages, for visitors to play.

Check it out -> Click Me

The codes: 15070, 68978, 74343, 81294, 98765, 23564